This article examines how CPUs, GPUs and TPUs differ in AI processing: their architectures, their operational characteristics, and their use in machine learning and computational intelligence.

Artificial intelligence (AI) is transforming many industries and driving technological change across the market. Its rapid progress depends on specialized hardware capable of performing demanding calculations. The CPU remains the standard foundation of computing systems, but GPUs introduced massively parallel processing, which makes them better suited to many AI and machine learning tasks. The Tensor Processing Unit (TPU) goes a step further: it is hardware designed specifically to accelerate AI workloads, particularly deep learning. This article compares CPUs, GPUs and TPUs in terms of architecture, performance and limitations, so that readers can choose the hardware best suited to their AI workloads. As AI continues to advance, the question of which processing unit is optimal will keep shaping progress in machine learning and computational intelligence.

Understanding CPUs (Central Processing Units)

The Central Processing Unit (CPU) is the primary processor in today's computers and is often described as the machine's brain. It is the fundamental hardware component that executes programs and coordinates the operation of software applications.

Definition and Role

The CPU is a general-purpose processor that executes a program's instructions in sequence. It fetches each instruction from memory, decodes it, and carries out the arithmetic, logic or data-movement operation it specifies. CPUs handle the basic computing work in nearly all modern electronic devices, including desktop computers, laptops, smartphones and tablets.
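To make the fetch-and-execute cycle concrete, here is a minimal Python sketch of a toy processor. The instruction names (LOAD, ADD, MUL, PRINT, HALT) and the single accumulator register are hypothetical simplifications; real CPUs decode binary machine code and overlap these steps through pipelining.

```python
# A toy CPU: a program counter walks through "memory" one instruction at a time.
# The instruction set used here is hypothetical, chosen only for illustration.

def run(program):
    registers = {"acc": 0}  # a single accumulator register
    output = []
    pc = 0  # program counter: address of the next instruction
    while True:
        opcode, operand = program[pc]  # fetch the instruction at address pc
        pc += 1
        # decode the opcode and execute the matching operation
        if opcode == "LOAD":
            registers["acc"] = operand
        elif opcode == "ADD":
            registers["acc"] += operand
        elif opcode == "MUL":
            registers["acc"] *= operand
        elif opcode == "PRINT":
            output.append(registers["acc"])
        elif opcode == "HALT":
            return output
        else:
            raise ValueError(f"unknown opcode {opcode!r} at address {pc - 1}")

program = [("LOAD", 2), ("ADD", 3), ("MUL", 4), ("PRINT", None), ("HALT", None)]
print(run(program))  # [20]
```

Each instruction depends on the result of the one before it, which is exactly the kind of sequential work CPUs are built to execute quickly.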

Structure and Architecture

A CPU has two essential components: the arithmetic logic unit (ALU), which performs mathematical and logical operations, and the control unit (CU), which directs the flow of data through the processor. Modern CPUs contain multiple cores, allowing them to run several tasks simultaneously and improving overall performance. Clock speed, measured in gigahertz (GHz), indicates how many cycles each core completes per second; it strongly affects how quickly instructions execute, although the number of cores and the amount of work done per cycle matter as well.
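A common back-of-the-envelope way to see how cores and clock speed combine is the theoretical peak throughput: cores × clock rate × floating-point operations per cycle. The sketch below assumes this simplified model; the core counts, clock speeds and operations-per-cycle values are hypothetical, not figures for any real product.

```python
def peak_gflops(cores: int, clock_ghz: float, flops_per_cycle: int) -> float:
    """Theoretical peak in billions of floating-point operations per second.

    Real workloads rarely reach this figure: memory bandwidth, branch
    mispredictions and dependencies between instructions all reduce it.
    """
    return cores * clock_ghz * flops_per_cycle

# Hypothetical configurations for illustration only.
print(peak_gflops(cores=8, clock_ghz=3.0, flops_per_cycle=16))  # 384.0
print(peak_gflops(cores=4, clock_ghz=4.0, flops_per_cycle=16))  # 256.0
```

The second configuration has the higher clock speed yet the lower peak, which is why GHz alone is an incomplete measure of CPU performance.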

Strengths

CPUs perform well across a wide range of tasks, including running the operating system, managing programs and executing general-purpose algorithms. This flexibility makes them an excellent choice for applications that need both single-threaded and multi-threaded processing.

Limitations

Despite their versatility, CPUs do not efficiently handle the highly parallel workloads common in artificial intelligence and deep learning. Their architecture, which favors fast sequential execution on a small number of cores, becomes a bottleneck when many computations must be performed at once.

Importance in Computing

Computing systems depend fundamentally on CPUs because they supply the essential processing capability that every device needs. Even as specialized accelerators spread, modern computing still relies on the CPU to run devices and to provide the basic functionality that software requires.

Understanding the CPU therefore means recognizing both its technical capabilities and its limits in AI and parallel processing.

Overview of GPUs (Graphics Processing Units)

Graphics Processing Units (GPUs) are specialized processors originally built to render images for display. Through continuous development, they have become fundamental components of AI and parallel computing systems.

Definition and Role

GPUs are hardware accelerators designed for parallel processing, so they can perform thousands of operations simultaneously and efficiently. Originally intended for visual rendering, they now play an essential role in AI, especially deep learning and neural network training.

Architecture and Technical Features

A GPU contains far more cores than a CPU, which lets it perform many computations simultaneously. This architecture is well suited to the matrix calculations that underpin machine learning. GPUs also use high-bandwidth memory, providing the rapid data transfer that large-scale computations require.
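Matrix multiplication parallelizes well because each output row (indeed, each output element) can be computed independently of the others. The Python sketch below illustrates that decomposition by handing rows to a thread pool; it is a conceptual illustration only, since in CPython the global interpreter lock prevents threads from speeding up pure-Python arithmetic, and the function names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor

def row_times_matrix(row, b):
    """Compute one row of A @ B; it needs only that row of A and all of B."""
    cols = len(b[0])
    return [sum(row[k] * b[k][j] for k in range(len(b))) for j in range(cols)]

def matmul_sequential(a, b):
    # One row after another, as a single CPU core would proceed.
    return [row_times_matrix(row, b) for row in a]

def matmul_parallel(a, b, workers=4):
    # Rows are independent, so they can be dispatched to separate workers.
    # A GPU applies the same idea at a much finer grain, with thousands of
    # cores each computing individual output elements.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(row_times_matrix, a, [b] * len(a)))

a = [[1, 2], [3, 4], [5, 6]]
b = [[7, 8], [9, 10]]
print(matmul_parallel(a, b))  # [[25, 28], [57, 64], [89, 100]]
```

Both functions return the same result; only the scheduling differs, which is the property that lets GPU hardware spread the work across many cores.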

Advantages in AI and Machine Learning

GPUs excel at the massively parallel operations common in deep learning model training. Their ability to process large datasets quickly makes them valuable in AI applications such as image recognition and natural language processing. As AI algorithms grow more complex, GPUs give developers and researchers an effective way to scale.

Challenges and Limitations

GPUs also have disadvantages. They cost more than CPUs and consume more energy in operation. Because of their specialized design, they offer limited benefits for general-purpose computation, so for applications unrelated to AI or graphics rendering, a CPU is usually the more suitable choice.

Significance in Modern Computing

GPUs have become a fundamental requirement for AI research and for building data-centric applications. Scientific research, video games, self-driving vehicles and cloud platforms have all achieved major advances by incorporating GPUs, and organizations across many industries use them to obtain fast, efficient results.

GPUs thus play a foundational role in modern computing, providing the parallel processing power that performance-demanding applications need.

Deep Dive into TPUs (Tensor Processing Units)

The Tensor Processing Unit (TPU) is specialized hardware built to run artificial intelligence models as efficiently as possible. Google introduced it in 2016 to supply the additional processing power that increasingly complex AI applications required.

Origin and Purpose

TPUs, which debuted in 2016, were Google's first chips designed specifically to accelerate machine learning. Unlike CPUs and GPUs, they are specialized for processing tensors, the multidimensional arrays of numbers on which deep learning is built. Their purpose-built design speeds up neural network training and also supports inference.

Key Design Principles

TPUs are designed primarily to perform vector operations and matrix multiplication, the operations at the heart of modern AI. They use low-precision numeric formats such as bfloat16, which allow fast computation with lower power consumption. By combining high energy efficiency with speed, TPUs stay efficient during large-scale processing runs.
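bfloat16 keeps the 8-bit exponent of a standard 32-bit float but only 7 explicit mantissa bits, so it covers the same numeric range with much less precision. The Python sketch below shows this by zeroing the low 16 bits of a float32 encoding; the function name is hypothetical, and it truncates for simplicity, whereas hardware conversions typically round to nearest instead.

```python
import struct

def to_bfloat16(x: float) -> float:
    """Reduce x to bfloat16 precision by truncation (round toward zero).

    bfloat16 keeps float32's sign bit and all 8 exponent bits but only the
    top 7 of its 23 mantissa bits, so zeroing the low 16 bits of the float32
    encoding yields a bfloat16 value. x must lie within float32 range.
    """
    (bits,) = struct.unpack(">I", struct.pack(">f", x))
    (result,) = struct.unpack(">f", struct.pack(">I", bits & 0xFFFF0000))
    return result

print(to_bfloat16(3.14159265))  # 3.140625: only 2 to 3 significant digits survive
print(to_bfloat16(1e38))        # still about 1e38: same exponent range as float32
```

Neural networks generally tolerate this loss of precision, while the halved storage and simpler multipliers let the hardware move and process more numbers per second and per watt.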

Advantages for AI Workloads

Speed is the primary advantage of TPUs, but they also scale well. They handle massive volumes of data efficiently, which shortens AI model training. TPU Pods connect many TPU chips over a high-speed network, expanding capacity for the most resource-intensive workloads. TPUs also reduce energy use, making operation more cost-effective and environmentally friendly.
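One common way to use many networked accelerators is data parallelism: each chip processes a slice of the batch, and the per-chip gradients are then averaged. The Python sketch below simulates this sequentially on a one-parameter linear model; the devices, the model and all function names are hypothetical, and in practice a framework performs the sharding and the averaging across real chips.

```python
# Data-parallel training, simulated sequentially. Each "device" is hypothetical
# and simply stands in for one accelerator chip in a larger pod.

def split_batch(batch, num_devices):
    """Divide a batch into equal shards, one per device."""
    if len(batch) % num_devices:
        raise ValueError("batch size must be divisible by num_devices")
    size = len(batch) // num_devices
    return [batch[i * size:(i + 1) * size] for i in range(num_devices)]

def local_gradient(w, shard):
    """Gradient of mean squared error for the model y = w * x on one shard."""
    # d/dw mean((w*x - y)^2) = mean(2 * x * (w*x - y))
    return sum(2 * x * (w * x - y) for x, y in shard) / len(shard)

def all_reduce_mean(values):
    """Average per-device results, standing in for the pod's collective step."""
    return sum(values) / len(values)

def data_parallel_gradient(w, batch, num_devices):
    shards = split_batch(batch, num_devices)
    grads = [local_gradient(w, s) for s in shards]  # would run concurrently
    return all_reduce_mean(grads)

batch = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]
print(data_parallel_gradient(0.0, batch, num_devices=2))  # -30.0
print(local_gradient(0.0, batch))                         # -30.0
```

Because the shards are equal in size, the average of the shard gradients equals the full-batch gradient, so adding devices splits the work without changing the result.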

Limitations

For all their advantages, TPUs have limitations, mainly in flexibility rather than speed. As specialized processors, they offer a narrower range of functionality than Central Processing Units (CPUs) or Graphics Processing Units (GPUs). They execute specialized AI algorithms very well but are poorly suited to general-purpose computation.

Applications and Real-world Impact

TPUs power AI solutions in natural language processing, recommendation systems and large-scale image analysis. Google's large-scale AI and cloud services benefit most, since these accelerators help advance both its research and its enterprise workloads.

TPUs demonstrate how purpose-built engineering can deliver targeted AI performance. By making previously impractical workloads feasible, they continue to push machine learning forward.

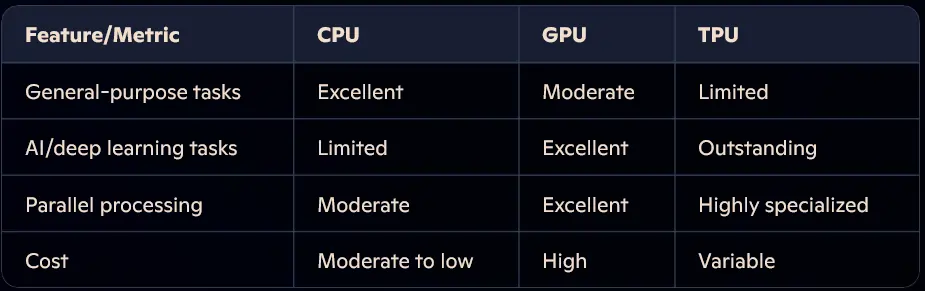

CPU vs. GPU vs. TPU: A Comparative Analysis

As AI grows in importance, businesses need processing hardware suited to their specific tasks. CPUs, GPUs and TPUs each serve distinct functions and match different computing requirements.

Performance Benchmarks

CPUs perform best at running applications and managing the operating system, work that consists largely of sequential computations. GPUs outperform CPUs on parallel workloads, which makes them well suited to training deep learning models. TPUs deliver high speed and scalability for tensor calculations, making them ideal for neural networks during both training and inference.

Architecture and Capabilities

A CPU has a relatively small number of powerful cores optimized for fast single-threaded execution. A GPU has hundreds or thousands of simpler cores, which lets it perform many calculations at once. A TPU has a specialized structure optimized for matrix processing, accelerating the tensor operations at the core of machine learning workloads.

Cost-Effectiveness

CPUs are the most cost-effective option for everyday computing because they provide flexible performance at a modest price. GPUs cost more than ordinary processors but deliver exceptional computing power for graphics and AI applications. TPUs are usually accessed through pay-per-use cloud services, so organizations pay a variable cost instead of investing in hardware up front.
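The trade-off between pay-per-use access and buying hardware can be framed as a break-even calculation: renting is cheaper until cumulative usage costs exceed the purchase price. The Python sketch below assumes a flat hourly rate; the prices and the function name are hypothetical and do not reflect any provider's actual pricing.

```python
def break_even_hours(purchase_cost: float, hourly_rate: float) -> float:
    """Hours of pay-per-use rental that cost the same as buying hardware outright.

    Ignores power, cooling, maintenance and depreciation of owned hardware;
    including them would push the break-even point higher.
    """
    if hourly_rate <= 0:
        raise ValueError("hourly_rate must be positive")
    return purchase_cost / hourly_rate

# Hypothetical prices, chosen only to make the arithmetic easy to follow.
print(break_even_hours(purchase_cost=10_000.0, hourly_rate=2.50))  # 4000.0
```

Under these assumed figures, a team that needs the accelerator for fewer than about 4,000 hours would spend less by renting.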

Energy Efficiency

CPUs power everyday computers efficiently but are ineffective at processing highly parallel workloads. GPUs draw more power because of their computational capability, yet they remain essential for AI data processing. For large-scale deployments, TPUs offer better efficiency per calculation.
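Efficiency per calculation is about energy per unit of work, not peak power draw: a device that draws more power can still use less energy overall if it finishes the job much faster. The Python sketch below works through this with two hypothetical devices; the throughput and wattage figures are assumptions for illustration, not measurements of real hardware.

```python
def energy_joules(total_ops: float, ops_per_second: float, watts: float) -> float:
    """Energy to finish a fixed workload: runtime in seconds x average power in watts."""
    runtime_s = total_ops / ops_per_second
    return runtime_s * watts

def ops_per_joule(ops_per_second: float, watts: float) -> float:
    """Efficiency per calculation (performance per watt)."""
    return ops_per_second / watts

WORKLOAD = 1e15  # total operations in a hypothetical job

# Two hypothetical devices: B draws 4x the power of A but runs 10x faster.
print(energy_joules(WORKLOAD, ops_per_second=1e12, watts=100))  # 100000.0 (device A)
print(energy_joules(WORKLOAD, ops_per_second=1e13, watts=400))  # 40000.0 (device B)
```

Device B uses 60% less energy for the same job because its operations per joule are 2.5 times higher, which is the sense in which specialized accelerators can be more efficient at scale.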

Best Use Cases

Thanks to their versatility, CPUs suit edge computing and lightweight models. GPUs lead in computer vision, natural language processing and gaming. TPUs are best for large-scale model training, cloud deployments and machine learning projects that need to scale.
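The guidance above can be condensed into a rough rule of thumb. The Python sketch below encodes it as a small decision function; the `Workload` fields and the `suggest_processor` name are hypothetical, and real hardware decisions should rest on benchmarks, budgets and availability rather than on a few yes/no questions.

```python
from dataclasses import dataclass

@dataclass
class Workload:
    highly_parallel: bool   # many independent calculations at once?
    tensor_dominated: bool  # mostly matrix/tensor math, as in neural networks?
    large_scale: bool       # big models or datasets, e.g. training at scale?
    on_edge_device: bool    # must run locally on a phone, IoT or embedded device?

def suggest_processor(w: Workload) -> str:
    """Rule of thumb following this article's guidance; not a benchmark."""
    if w.on_edge_device or not w.highly_parallel:
        return "CPU"  # versatile, local, or mostly sequential work
    if w.tensor_dominated and w.large_scale:
        return "TPU"  # large-scale tensor workloads, typically in the cloud
    return "GPU"      # other parallel work: vision, NLP, graphics

print(suggest_processor(Workload(highly_parallel=True, tensor_dominated=True,
                                 large_scale=True, on_edge_device=False)))  # TPU
```

The edge check comes first because a local, lightweight deployment rules out large accelerators regardless of how parallel the workload is.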

Each processing unit plays an essential role in the computing environment, and the three complement one another. Understanding their differences helps users make better decisions about AI and its applications.

Real-world Applications

CPUs, GPUs and TPUs enable transformative solutions across many practical applications, with each type of processor suited to particular problems.

CPU-powered Applications

The CPU is the fundamental component of traditional computing and performs well in tasks that demand flexibility. The operating system depends on it for basic functions such as application control, web browsing, document editing and email. In edge computing, CPUs provide local processing for smartphones, IoT devices and embedded systems without requiring a constant internet connection.

GPU-powered Applications

The ability of GPUs to handle parallel processing has transformed several industries. They dominate gaming and 3D rendering, where they deliver superior graphics performance and immersive visuals. Beyond entertainment, AI applications depend on them: GPUs process large volumes of images for computer vision, and natural language processing relies on them to run chatbots and translation services. GPU acceleration also makes scientific research faster and more accurate in fields such as climate modeling and molecular dynamics.

TPU-powered Applications

TPUs are designed specifically for artificial intelligence and machine learning workloads, which makes them essential building blocks of current AI development. Their ability to train large neural networks advances healthcare research, for example through systems that detect disease early from medical images. TPUs also power cloud-based AI services that let businesses and researchers build scalable intelligent systems, and retail businesses use them to optimize recommendation systems that deliver personalized product suggestions.

Hybrid Deployments

Many applications now combine these processors. An autonomous driving system, for example, might rely on CPUs for overall control, GPUs for object detection, and TPUs for the models that support decision making. Such combinations show how each processor's distinctive strengths help solve complex, multidisciplinary problems.

Conclusion

All three processors are necessary parts of the technological landscape because each handles different computational operations. CPUs provide flexibility for general tasks, GPU parallel processing remains essential for AI applications and graphics, and the TPU's specialized tensor processing has sped up the training of deep learning models. Using computational resources effectively depends on understanding each processor's distinct characteristics and limits. Machine learning and computational intelligence will continue to advance alongside hardware innovations such as TPUs.